Gender bias in popular movies

A computational approach

This page is also available in French, German and Brazilian Portuguese.

This is an informal account of the research published in Humanities and Social Sciences Communications by Antoine Mazieres, Telmo Menezes and Camille Roth.

Current debates on gender bias in media stand on the shoulders of a long-standing academic endeavor to capture, analyze and deconstruct representations of the feminine and the masculine in a variety of media such as TV, books and radio. Since the 1950s, researchers have been identifying sexual stereotypes, biases in occupational roles, body staging, marriage and rape, and have established strong, stable patterns, for instance women appearing "as dependent on men", "unintelligent", "less competitive" and "more sexualized" (Linda Busby, 1975).

These analyses are hard work. They require many researchers to agree on how to annotate a medium, to define a common understanding of features and meanings and, of course, to assess the content by watching, listening to or reading it many times over. This makes it possible to systematize a deep understanding and appraisal of the representations at stake, but it limits the possibility of reproducing such studies at scale and at several points in time to capture underlying trends.

Our work explores the possibility of using artificial intelligence and machine learning algorithms to overcome such limits. On the one hand, it is not possible for a computer to systematically capture fine-grained representations of concepts such as dependence, intelligence, competitiveness or sexualization, at least for now. On the other hand, algorithms can perform tens of millions of simple tasks, such as counting occurrences, in no time. Therefore,

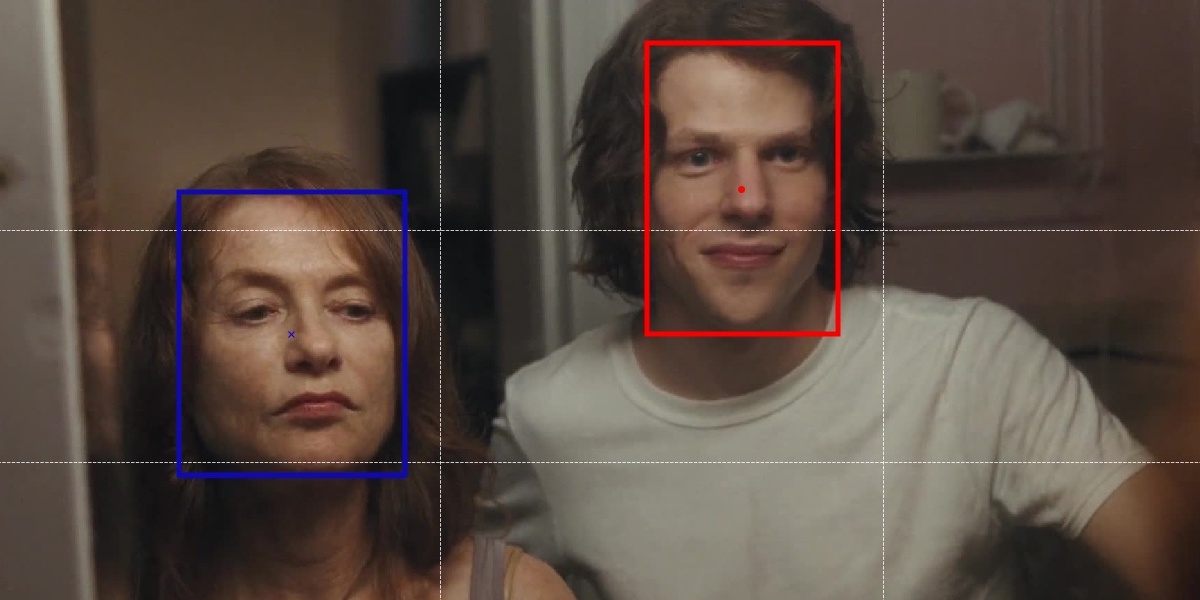

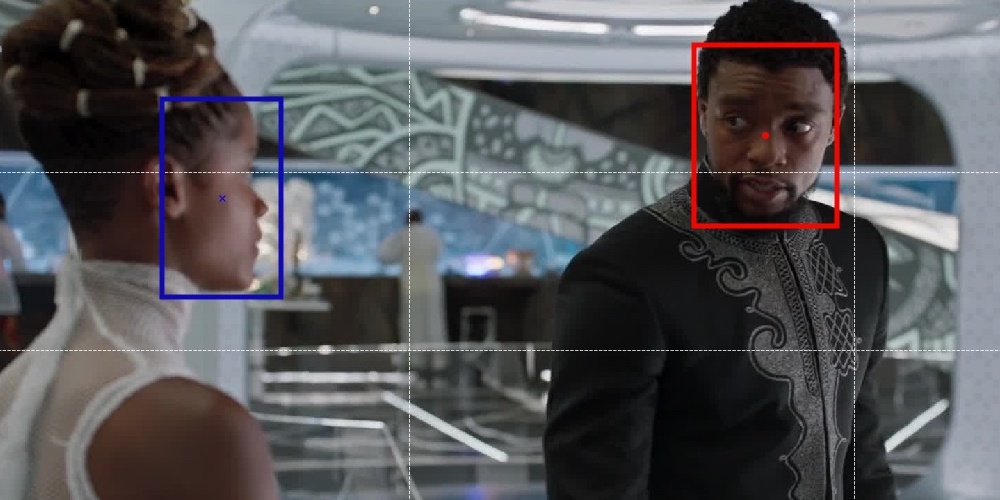

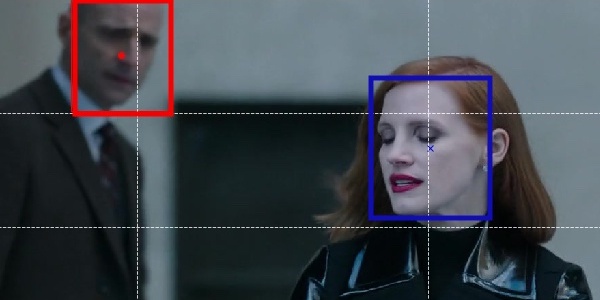

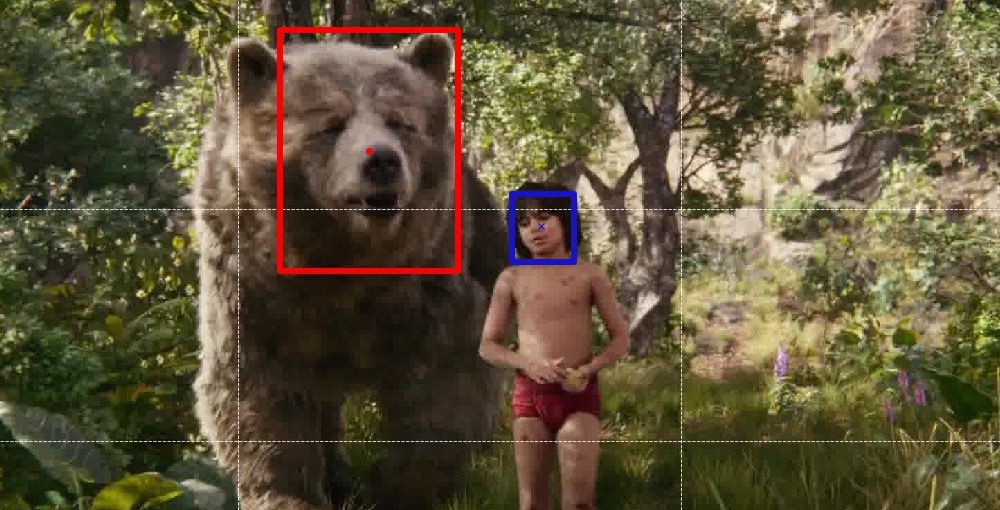

We selected movies that are amply shared on peer-to-peer networks and well documented on IMDb, the idea being to capture works that are both characteristic of cultural representations and influential in shaping them. For each movie we extracted one frame every 2 seconds and applied image analysis algorithms to detect faces and guess whether they were of women or men. Below are examples of successful guesses along with illustrative mistakes. The presence of errors does not necessarily invalidate the whole protocol. What matters is to characterize precisely when and how the algorithms are mistaken, so that the observations can be corrected across the whole dataset, which comprises more than 12 million images.
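The correction procedure itself is described in the academic version of this work, not here. As a hedged illustration only, the sketch below shows one standard way to correct a proportion for classifier errors, which is not necessarily the method we used. It assumes that a hand-annotated sample gives two rates: how often a truly female face is labelled female (the hypothetical `hit_rate`) and how often a truly male face is labelled female (`false_alarm_rate`). It only addresses gender misclassification; faces the detector misses entirely would need a separate correction.

```python
def corrected_female_ratio(observed_ratio: float,
                           hit_rate: float,
                           false_alarm_rate: float) -> float:
    """Estimate the true share of female faces from the classifier's output.

    observed_ratio:   share of detected faces that were labelled female
    hit_rate:         P(labelled female | face is actually female)
    false_alarm_rate: P(labelled female | face is actually male)
    Both rates are assumed to come from a hand-annotated sample (hypothetical).
    """
    # observed = hit_rate * p + false_alarm_rate * (1 - p); solve for p.
    gap = hit_rate - false_alarm_rate
    if gap <= 0:
        raise ValueError("classifier must do better than chance")
    estimate = (observed_ratio - false_alarm_rate) / gap
    # Sampling noise can push the estimate outside [0, 1], so clamp it.
    return min(1.0, max(0.0, estimate))
```

For instance, with a hypothetical hit rate of 0.90 and false-alarm rate of 0.05, an observed share of 0.39 corresponds to an estimated true share of about 0.40.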

Ratio of female faces and its evolution

On average, over the whole dataset, only 34.52% of the faces displayed in a movie are detected as female. To illustrate informally what this ratio means in practice, here is how a few top-grossing movies fare. First, among movies with a high percentage of male faces (ratio of female faces < 25%), we find Pirates of the Caribbean (2007), Star Wars (2005), Matrix (2003), Independence Day (1996) and Forrest Gump (1994), all with a ratio of around 23%. Movies such as The Hunger Games (2014), Jurassic World (2015), Rogue One (2016) and Gravity (2013) lie close to female-male parity, with ratios between 45% and 55%. Lastly, the movie with the highest ratio of female faces (68%) is Bad Moms (2016), closely followed by Sisters (2015), Life of the Party (2018) and Cake (2014).
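A minimal sketch of how a per-movie ratio might be computed from frame-level detections, assuming each sampled frame yields a list of predicted labels ("female" or "male"; the label strings and function names are hypothetical). A face visible in many frames is counted once per frame, which is what makes the count a proxy for on-screen presence. The bands mirror the informal thresholds used above (below 25%, and 45% to 55%); anything else is left unlabelled, since the text defines no further categories.

```python
from collections import Counter


def female_face_ratio(frame_labels):
    """Share of detected faces labelled "female" across all sampled frames.

    frame_labels: iterable of per-frame label lists,
                  e.g. [["male", "female"], [], ["male"]] (hypothetical format).
    Labels other than "female" and "male" are ignored.
    """
    counts = Counter(label for frame in frame_labels for label in frame)
    total = counts["female"] + counts["male"]
    if total == 0:
        raise ValueError("no faces detected")
    return counts["female"] / total


def ratio_band(ratio):
    # Thresholds taken from the informal examples in the text.
    if ratio < 0.25:
        return "male-dominated"
    if 0.45 <= ratio <= 0.55:
        return "near parity"
    return "other"
```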

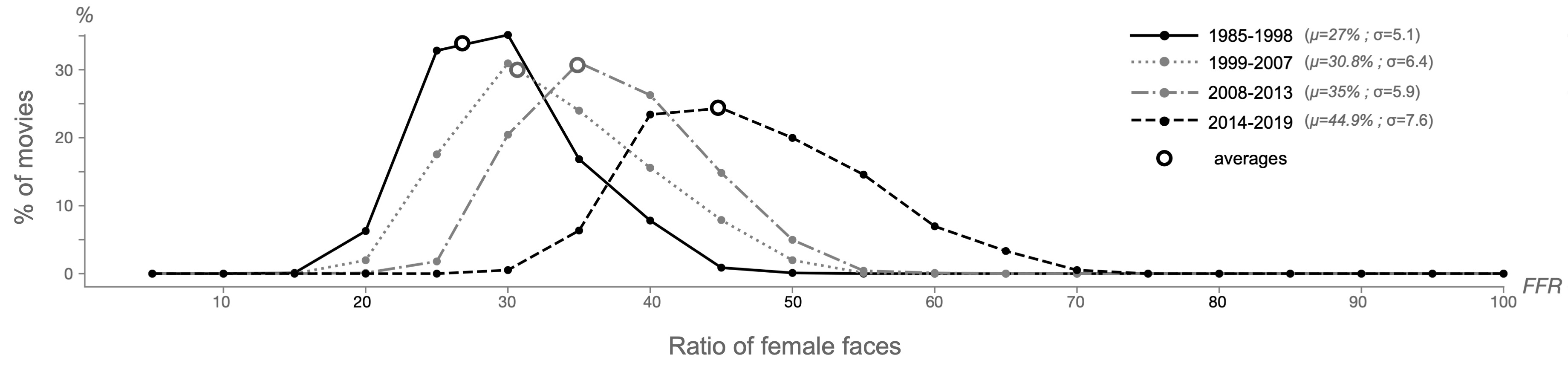

How is this ratio evolving over time? We split our dataset chronologically into 4 periods, each containing the same number of movies. We observed a significant increase in the share of female faces: from 1985 to 1998 the ratio is 27%, and it comes much closer to female-male parity in the most recent period, from 2014 to 2019, at 44.9%. The diversity of situations (formally, the variance of this ratio) also increases, which means that recent films span a wider range of on-screen female-male shares.
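The temporal split can be sketched as follows, assuming a hypothetical list of `(release_year, female_face_ratio)` pairs. Movies are sorted by year and cut into four groups of (nearly) equal size; for each group the sketch reports the year range, the mean ratio and the population variance, the two quantities discussed above. Because the cut is made by count rather than by calendar year, movies released in the same year can land in adjacent groups.

```python
from statistics import mean, pvariance


def period_stats(movies, n_chunks=4):
    """Split movies into n_chunks groups of (nearly) equal size by release year.

    movies: list of (year, female_face_ratio) pairs (hypothetical format).
    Returns one (first_year, last_year, mean_ratio, variance) tuple per group.
    """
    if len(movies) < n_chunks:
        raise ValueError("need at least one movie per chunk")
    # Stable sort on year only, so same-year movies keep their input order.
    ordered = sorted(movies, key=lambda movie: movie[0])
    size, extra = divmod(len(ordered), n_chunks)
    stats, start = [], 0
    for i in range(n_chunks):
        # The first `extra` chunks take one additional movie each.
        end = start + size + (1 if i < extra else 0)
        chunk = ordered[start:end]
        ratios = [ratio for _, ratio in chunk]
        stats.append((chunk[0][0], chunk[-1][0], mean(ratios), pvariance(ratios)))
        start = end
    return stats
```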

Caution is required when interpreting these results. The bottom line is that we measured the number of faces, which is a good proxy for physical on-screen presence. We are dealing with over- and under-representation, and not at all with how women or men are actually represented. Much tedious research remains to be done before leaping from the representativeness we assessed to the actual representations of women and men in movies. There may be strong incentives for production companies to foster more female presence in movies, but this effort can easily end in mere “purplewashing” that re-enacts conservative stigmatization of gender.

Other results

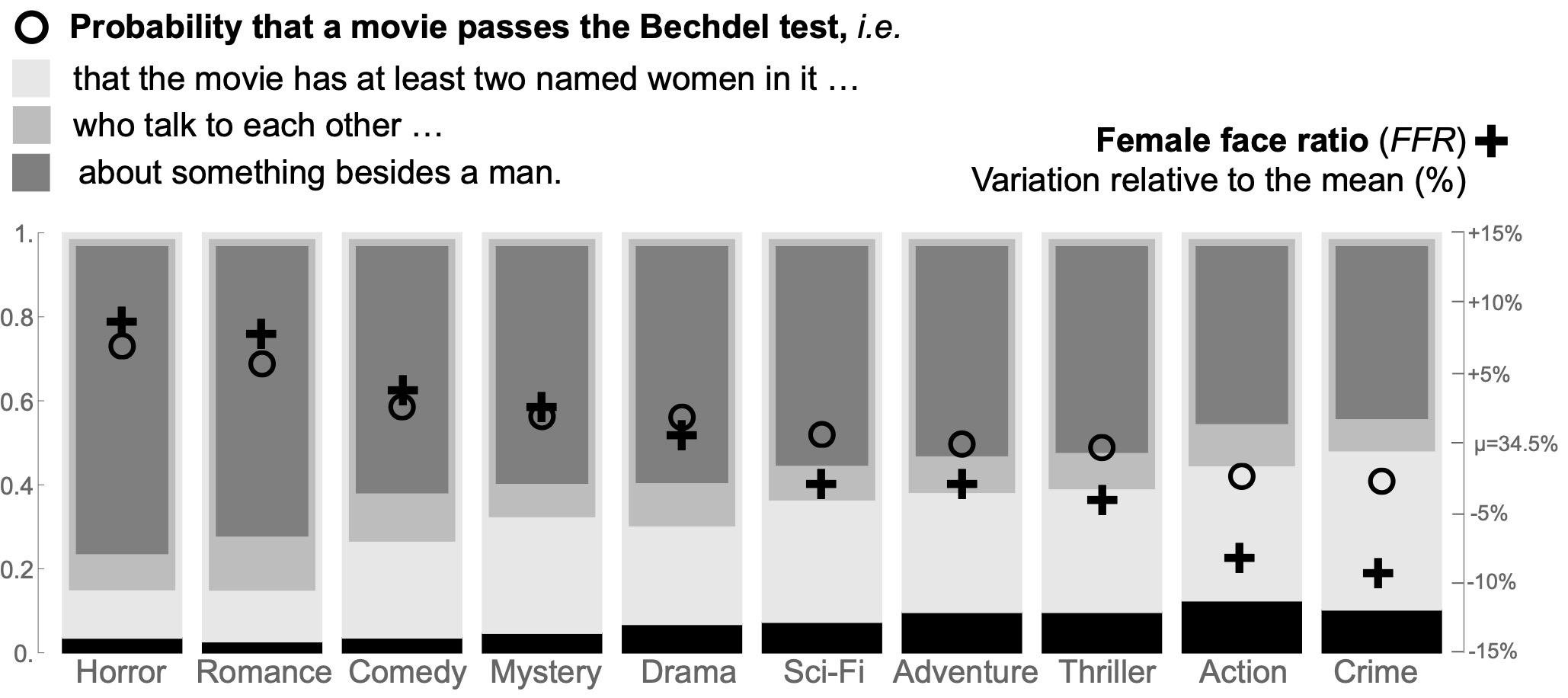

The ratio of female faces varies significantly from one movie genre to another. Crime movies display the lowest ratio of female faces (31.3%), while Horror movies display the highest (37.1%), closely followed by Romances and Comedies. We used this variation to compare our metric with another well-known measure, the Bechdel Test, which a film passes if at least two named women talk to each other about something other than a man. The two metrics turn out to be highly correlated in how they vary from one genre to another, which suggests that counting faces might be a relevant proxy for more semantic features.
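The genre comparison amounts to correlating two per-genre series. The text does not say which correlation coefficient was used; the sketch below assumes Pearson's r and takes two hypothetical dictionaries mapping genre names to the female-face ratio and to the Bechdel pass rate (the latter would have to come from an external source). Only genres present in both dictionaries are compared.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / (sx * sy)


def genre_correlation(face_ratio_by_genre, bechdel_pass_rate_by_genre):
    """Correlate two per-genre metrics over the genres both mappings share."""
    genres = sorted(face_ratio_by_genre.keys() & bechdel_pass_rate_by_genre.keys())
    return pearson([face_ratio_by_genre[g] for g in genres],
                   [bechdel_pass_rate_by_genre[g] for g in genres])
```

With only a handful of genres, a high coefficient is suggestive rather than conclusive, which matches the cautious "hypothesis" framing above.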

We also looked into IMDb metadata such as budget, gross revenue, and the value, count and demographics of ratings. Does “more women in a movie” correlate with more or less money invested in or made by a movie? Is the rating a movie receives on IMDb from women or from men indicative of its on-screen share of women? What about the number of raters? Despite some interesting patterns, there is no strong correlation (positive or negative) between these features and the ratio of female faces in a movie, except for one: the ratio of female raters. In other words, while the ratings women give a movie say little about how many women appear in it, the share of women among its raters does.

Last words and see also

Et voilà! We insisted on limiting our claims to what we know, the thorough use of computational methods in the social sciences, and on not jumping to hazardous interpretations regarding gender, a field of study we are interested in but not experts of. The academic version of this article gives more details about our research protocol, how we handled the algorithms' errors, minor results on possible gender bias in the mise-en-scène and mise-en-cadre of characters, and much more. If any question is still left unanswered, please feel free to contact us by email.

You can download our dataset here.

If you would like to know more about the topics mentioned in this article, here are a few tracks to follow:

- On gender in media, Linda Busby (1975) and Rena Rudy et al. (2010) are great places to start.

- The work of Tanaya Guha et al. (2015, 2021) has a lot of similarities with ours, with better algorithms but significantly less data (no temporal analysis, yet).

- Activist initiatives monitoring women’s presence in media include the Geena Davis Institute (which uses Guha’s method) and the yearly GLAAD report, both of which provide many figures and analyses.

- The work of Joy Buolamwini and Timnit Gebru (2018) and of Kate Crawford and Trevor Paglen (2019) provides detailed criticism of how image analysis algorithms are made and how they perform.

- For a bird’s-eye view of the use of computational image analysis in the social sciences, Taylor Arnold and Lauren Tilton (2019) might be of interest.